
Introduction

I’ve been building up my Cumulus Linux and FreeBSD virtual network over the course of the last several months. As you’ll recall from an earlier post, I decided to put a single aggregation router at the top of the network as an ingress and egress point. This allowed me to put a single static route for the entire virtual LAN and set it to the interface on the router. And it also allowed me access to the network even if I had to reboot one of the spines. However, with my recent work adding Free Range Routing (FRR) to my FreeBSD VMs, I thought of a different way to handle ingress and egress and remove a router hop.

The Virtual Network: Before

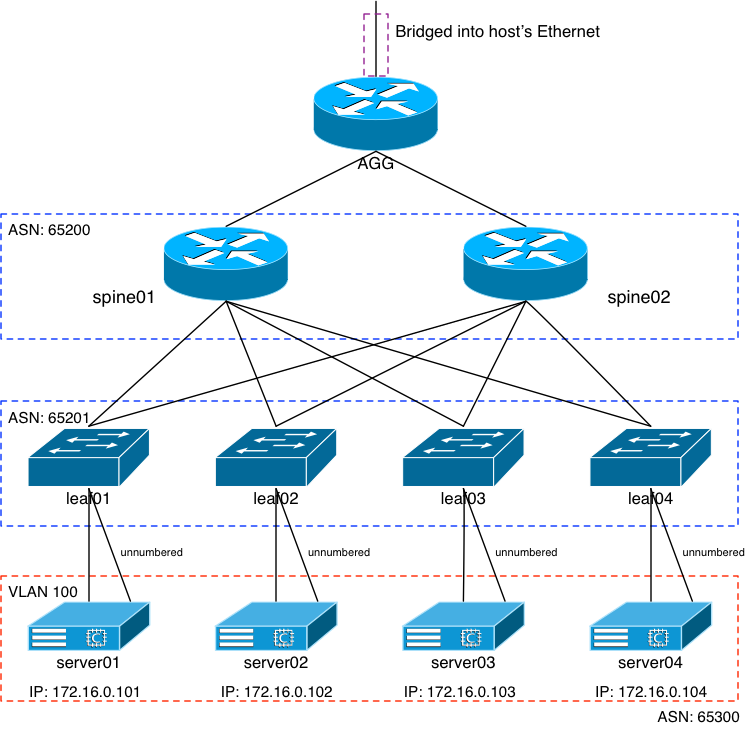

The following image has been used a bunch of times in my blog posts, but here’s what the “before” looked like:

The AGG router at the top of the network served as the ingress and egress for that virtual setup. I had a set of static routes on my host server as well as my home router, and even on my laptop all pointing towards the interface on the AGG. The AGG interface served as a single point for those static routes to be set to, and I knew I wouldn’t be doing much with the AGG router at all once it was up and running. All of my work with EVPN, VXLAN, etc would happen at the layer below the AGG. The advantage there is that the static routes wouldn’t need to change if I, say, had to reboot SPINE01.

FRR On The Router

After finishing up all of the work on my previous posts which included installing Free Range Routing on FreeBSD, I decided to try it on my router as well. I rebuilt my router as a FreeBSD device a few years ago, as outlined in this post. Since I knew how easy it was to get FRR running on FreeBSD, I installed it on the router. Once FRR was installed, I had to figure out how I wanted to peer it with the virtual network, how I wanted to handle the routing, etc.

The Physical Layout

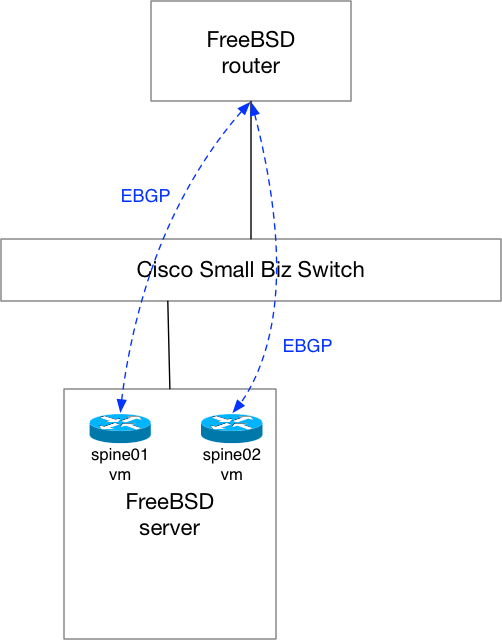

The physical layout looks roughly like this:

The router is connected to the same L2 switch as my FreeBSD hypervisor is. You can see that I have EBGP arrows drawn between the router and the two spine VMs. I’ll explain that in a bit. But ultimately, the internal interfaces on both the server and router are on the same VLAN, of course. And because of that, the router has L2 connectivity into the virtual network due to the bridging on the physical server. Since L2 exists, it’ll be simple to get the routers talking BGP with one another.

Router Configuration

Interface

The router already has an IP on my internal 192.168 VLAN. Each of the VMs on my server also has its management Ethernet interface on that same VLAN, so that I can perform maintenance. I decided to add an alias to the router’s interface on a completely different L3 subnet. Since I planned to have three interfaces on that subnet (the router and the two spines), a /30 with its two usable addresses was too small, so I needed to use a /29. In the /etc/rc.conf:

ifconfig_em0_alias0="10.200.0.1 netmask 255.255.255.248"

And then from the CLI so that I didn’t have to restart anything:

# ifconfig em0 inet 10.200.0.1/29 alias
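The subnet sizing above can be sanity-checked with Python's standard `ipaddress` module. This is only a sketch: the three addresses come from this post, while the helper name `usable_hosts` is made up for illustration.

```python
import ipaddress

# Addresses from this post: the router alias and the two spine swp1 interfaces.
ROUTER = ipaddress.ip_address("10.200.0.1")
SPINES = [ipaddress.ip_address("10.200.0.2"), ipaddress.ip_address("10.200.0.3")]


def usable_hosts(prefix_len: int) -> int:
    """Count usable host addresses in 10.200.0.0/<prefix_len> (hypothetical helper)."""
    net = ipaddress.ip_network(f"10.200.0.0/{prefix_len}")
    return len(list(net.hosts()))


# "10.200.0.1/29" carries both the address and the prefix length,
# which is the same information as the netmask form in rc.conf.
alias = ipaddress.ip_interface("10.200.0.1/29")

if __name__ == "__main__":
    print("/30 usable hosts:", usable_hosts(30))
    print("/29 usable hosts:", usable_hosts(29))
    print("netmask for /29:", alias.netmask)
    print("all three fit:", all(ip in alias.network for ip in [ROUTER, *SPINES]))
```

A /30 leaves room for only two hosts, so three interfaces need the next size up; the /29 yields the 255.255.255.248 netmask used in the rc.conf line.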

BGPD

The intent was to peer two different spine routers with this device, which would make unnumbered not possible. Therefore, the /usr/local/etc/frr/bgpd.conf:

    router bgp 65000
     bgp router-id 10.200.0.1
     bgp bestpath as-path multipath-relax
     neighbor 10.200.0.2 remote-as 65200
     neighbor 10.200.0.3 remote-as 65200
     !
     address-family ipv4 unicast
      neighbor 10.200.0.2 default-originate
      neighbor 10.200.0.2 soft-reconfiguration inbound
      neighbor 10.200.0.3 default-originate
      neighbor 10.200.0.3 soft-reconfiguration inbound
     exit-address-family

Pretty simple: EBGP peer with the two downstream IP addresses and enable EBGP multipath. That last bit didn’t work, and I’ll explain why in a moment.

The Virtual Network: After

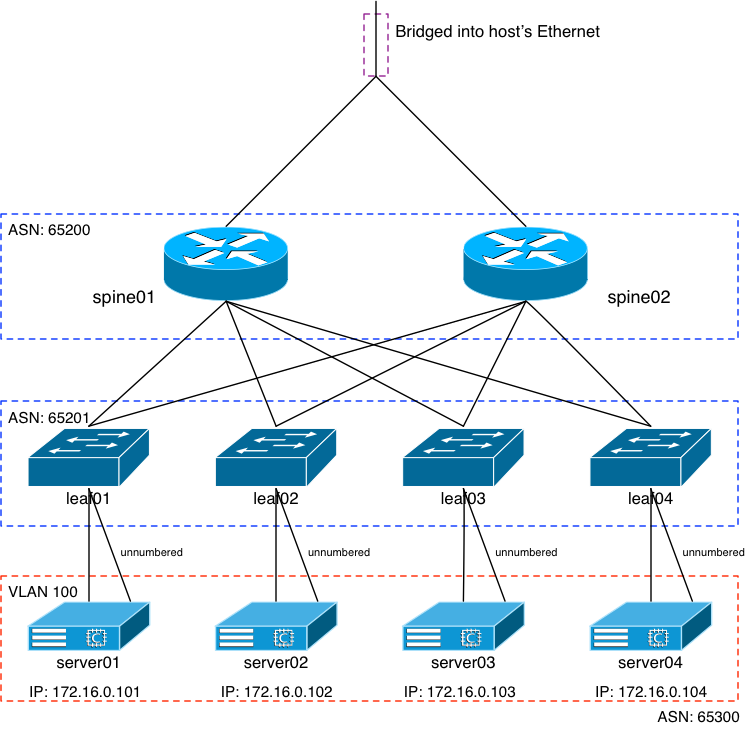

As you can see from the above diagram, it looks pretty similar to the “Before”, except the AGG layer is gone. Instead, the swp1 interfaces on the two SPINE routers that were connected to the AGG router are now bridged into the host’s Ethernet interface.

VM Config Change

The VM configuration change to make this happen was very easy. In the config files for both of the spine VMs, I merely changed which switch their “network1” interfaces were connected to. Previously, they were connected to switches that were shared with the AGG router. But after the change:

    network1_type="e1000"
    network1_switch="private"

That “private” switch is the one that’s shared with the hypervisor’s physical Ethernet interface. In other words: the VMs would be directly connected to my internal private VLAN.

Spine Config Changes

The config changes for the spines were handled by Ansible, of course.

Host File

The lines for the two spine routers were changed to reflect the IP addresses that would live on the swp1 interfaces:

    [spine]
    spine01 lo0=10.100.0.1 hostname=spine01 vl100=172.16.0.2 swp1=10.200.0.2
    spine02 lo0=10.100.0.2 hostname=spine02 vl100=172.16.0.3 swp1=10.200.0.3

Spine Interface File

I had to change the subnet on the swp1 interface from a /31 to a /29:

- add interface swp1 ip address {{ swp1 }}/29
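To make the substitution concrete, here is a rough sketch of how that one templated line expands for each spine. Ansible does this with Jinja2; the `render` function below is a hypothetical stand-in that handles only simple `{{ name }}` placeholders, and the dictionary simply restates the host variables from the inventory.

```python
# Host variables as listed in the inventory for the [spine] group.
INVENTORY = {
    "spine01": {"lo0": "10.100.0.1", "vl100": "172.16.0.2", "swp1": "10.200.0.2"},
    "spine02": {"lo0": "10.100.0.2", "vl100": "172.16.0.3", "swp1": "10.200.0.3"},
}

# The templated command from the spine interface file.
TEMPLATE = "add interface swp1 ip address {{ swp1 }}/29"


def render(template: str, host_vars: dict) -> str:
    """Replace '{{ name }}' placeholders; a stand-in for Jinja2, not Ansible itself."""
    out = template
    for name, value in host_vars.items():
        out = out.replace("{{ " + name + " }}", value)
    return out


def rendered_commands() -> dict:
    """Map each host name to its fully substituted command string."""
    return {host: render(TEMPLATE, host_vars) for host, host_vars in INVENTORY.items()}


if __name__ == "__main__":
    for host, command in rendered_commands().items():
        print(f"{host}: {command}")
```

Each spine ends up with its own address in the shared /29, which is why a single template line covers both hosts.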

Spine BGP File

Since both spines peer with the same IP address on the router, their BGP configurations are identical:

- add bgp neighbor 10.200.0.1 remote-as 65000

- add bgp neighbor 10.200.0.1 capability extended-nexthop

- add bgp neighbor 10.200.0.1 bfd

Cheating: Resetting Spine Configs Manually

In order for me to fire off the above Ansible changes and have them work properly, I would also need to write a series of commands to reset the previous configurations. In other words, remove the previous swp1 IP address and remove the previous BGP peer with the AGG router. Instead of spending the time writing those Ansible “reset” lines, I just went to each spine (there are, after all, only two of them) and manually reset those configurations. I changed /etc/network/interfaces and /etc/frr/frr.conf respectively, and then:

    # ifreload -a
    # service frr restart

on each. Done. Once that was done, I fired off the Ansible playbook command to get the interfaces and BGP configurations added.

Did It Work?

It did. Sort of. From the perspective of the spine routers it’s perfect. They’ve peered right up with my home router and are exchanging routes without an issue:

    root@spine01:mgmt-vrf:/home/jvp# net show bgp sum
    show bgp ipv4 unicast summary
    =============================
    BGP router identifier 10.100.0.1, local AS number 65200 vrf-id 0
    BGP table version 27
    RIB entries 35, using 5320 bytes of memory
    Peers 5, using 96 KiB of memory
    Peer groups 1, using 64 bytes of memory

    Neighbor                V         AS MsgRcvd MsgSent   TblVer  InQ OutQ  Up/Down State/PfxRcd
    leaf01(swp3)            4      65201   22607   22608        0    0    0 18:49:17            5
    leaf02(swp4)            4      65201   22605   22606        0    0    0 18:49:15            5
    leaf03(swp5)            4      65201   22609   22610        0    0    0 18:49:24            5
    leaf04(swp6)            4      65201   22608   22608        0    0    0 18:49:18            5
    lateapex-gw(10.200.0.1) 4      65000   22286   22609        0    0    0 18:43:06            3

    Total number of neighbors 5

    [REST CLIPPED]

    root@spine01:mgmt-vrf:/home/jvp# net show route 0.0.0.0/0
    RIB entry for 0.0.0.0/0
    =======================
    Routing entry for 0.0.0.0/0
      Known via "bgp", distance 20, metric 0, best
      Last update 18:43:45 ago
      * 10.200.0.1, via swp1

    FIB entry for 0.0.0.0/0
    =======================
    default via 10.200.0.1 dev swp1 proto bgp metric 20

However, remember I mentioned the EBGP multi-path not really working properly on my router? Well, it’s due to the fact that the FreeBSD kernel, by default, doesn’t support ECMP. A compile option needs to be added at build time in order to support it, and I’m not concerned enough to try and do that. My router has a very slow, 2-core CPU in it, so building a new kernel on it isn’t really high on my priority list. Since the kernel doesn’t support ECMP by default, the pre-built FRR packages for FreeBSD don’t, either. So while there are no errors thrown in BGP when I attempted to enable ECMP, only one route was installed:

    lateapex-gw# show ip bgp sum

    IPv4 Unicast Summary:
    BGP router identifier 10.200.0.1, local AS number 65000 vrf-id 0
    BGP table version 18
    RIB entries 35, using 5600 bytes of memory
    Peers 2, using 27 KiB of memory

    Neighbor        V         AS MsgRcvd MsgSent   TblVer  InQ OutQ  Up/Down State/PfxRcd
    10.200.0.2      4      65200   22765   22439        0    0    0 18:57:50           16
    10.200.0.3      4      65200   22765   22439        0    0    0 18:57:50           17

    Total number of neighbors 2

    lateapex-gw# show ip bgp 172.20.0.1/32
    BGP routing table entry for 172.20.0.1/32
    Paths: (2 available, best #2, table Default-IP-Routing-Table)
      Advertised to non peer-group peers:
      10.200.0.2 10.200.0.3
      65200 65201 65300
        10.200.0.3 (metric 1) from 10.200.0.3 (10.100.0.2)
          Origin IGP, valid, external
          AddPath ID: RX 0, TX 34
          Last update: Fri May 31 15:54:37 2019

      65200 65201 65300
        10.200.0.2 (metric 1) from 10.200.0.2 (10.100.0.1)
          Origin IGP, valid, external, best
          AddPath ID: RX 0, TX 17
          Last update: Fri May 31 15:54:37 2019

    lateapex-gw# show ip route 172.20.0.1/32
    Routing entry for 172.20.0.1/32
      Known via "bgp", distance 20, metric 0, best
      Last update 18:58:18 ago
      * 10.200.0.2, via em0

As you can see from the output above, I checked the BGP and routing tables for the Anycast route I’ve been working with over the past few posts: 172.20.0.1/32. The router sees two BGP announcements for it, and they’re actually identical from an AS path perspective. But because I don’t have ECMP enabled in FRR (or the router’s kernel), BGP’s best path selection went all the way down to the lowest router ID. That’s spine01’s 10.100.0.1 (versus spine02’s 10.100.0.2), so the only route installed is the one via 10.200.0.2.

Ultimately, that won’t make or break my lab network. If I have to reboot spine01 for some reason, or re-do its BGP, or whatever, then the peer with spine02 will take over and I’ll still be able to get to the virtual network. So BGP is still the right solution here, it’s just not quite as elegant as it would be if ECMP were supported.
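The tie-break and failover behavior described above can be sketched in a few lines of Python. This is a deliberately simplified model, not FRR's implementation: real best-path selection has many more steps (weight, local preference, origin, MED, route age and so on), and only AS path length and router ID are modeled here. The `Path` class and `select` function are hypothetical names; the addresses, router IDs, and AS paths are taken from the `show ip bgp` output.

```python
from dataclasses import dataclass
import ipaddress


@dataclass(frozen=True)
class Path:
    next_hop: str
    router_id: str
    as_path: tuple


# The two announcements for 172.20.0.1/32 seen on the router.
PATHS = [
    Path("10.200.0.3", "10.100.0.2", (65200, 65201, 65300)),  # via spine02
    Path("10.200.0.2", "10.100.0.1", (65200, 65201, 65300)),  # via spine01
]


def sort_key(path: Path):
    # Shorter AS path wins; the numerically lower router ID breaks ties.
    return (len(path.as_path), int(ipaddress.ip_address(path.router_id)))


def select(paths, ecmp=False):
    """Return the next hops that would be installed (simplified model)."""
    if not paths:
        return []
    ranked = sorted(paths, key=sort_key)
    best = ranked[0]
    if not ecmp:
        # No multipath: only the single best path reaches the routing table.
        return [best.next_hop]
    # With multipath-relax, any path of equal AS-path length is eligible.
    return [p.next_hop for p in ranked if len(p.as_path) == len(best.as_path)]


if __name__ == "__main__":
    print("no ECMP:     ", select(PATHS))
    print("with ECMP:   ", select(PATHS, ecmp=True))
    print("spine01 down:", select([PATHS[0]]))
```

Without ECMP only 10.200.0.2 is installed; with it, both next hops would be. Dropping spine01's path leaves 10.200.0.3, which is the failover I'm relying on.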